An unexpected side-quest with SwiftUI, OpenAI’s API, CloudKit and Swift Data, and what I learned about the limits and possibilities of AI in modern product development.

Not another vibe coder

You’re thinking, “Oh no, not another vibe-coding story.”

I get it. I’ve seen plenty of those. Someone asks an AI to build something, it spits out code, and they act shocked that it almost works.

But this isn’t that story. I’ve spent 20 years designing products, leading teams, advising companies, and shipping countless apps, some of which are likely on your phone right now. I’ve worked at Apple and developed a deep appreciation for turning innovative ideas into practical, human-centered products. This isn’t a story about what AI is going to replace. It’s about what AI can genuinely contribute to modern app development, where its limitations lie, and how we, as developers and product thinkers, can best leverage its strengths.

For example, last year I used AI to help me turn AirPods into fitness trackers to count push-ups using their built-in accelerometers. I love exploring the untapped potential in existing technology.

This post is part developer journal, part product exploration, and part practical guide to AI’s capabilities in product development. Because what happened in that single weekend (and the month that followed) taught me more about the future of building products than the previous six months of reading about AI.

Buckle up.

The journey of a thousand miles begins with a single prompt

It all started innocently enough.

I had an idea bouncing around in my head, and as has become a habit, I like capturing ideas in GPT. Writing things down helps me declutter my brain, especially when I’m trying to wind down for the night. Sometimes I come back to them later, develop them further, or archive them — but first, they need to get out.

Before going to bed, I quickly typed this into Claude so that I could revisit it in the morning:

And here is what it replied:

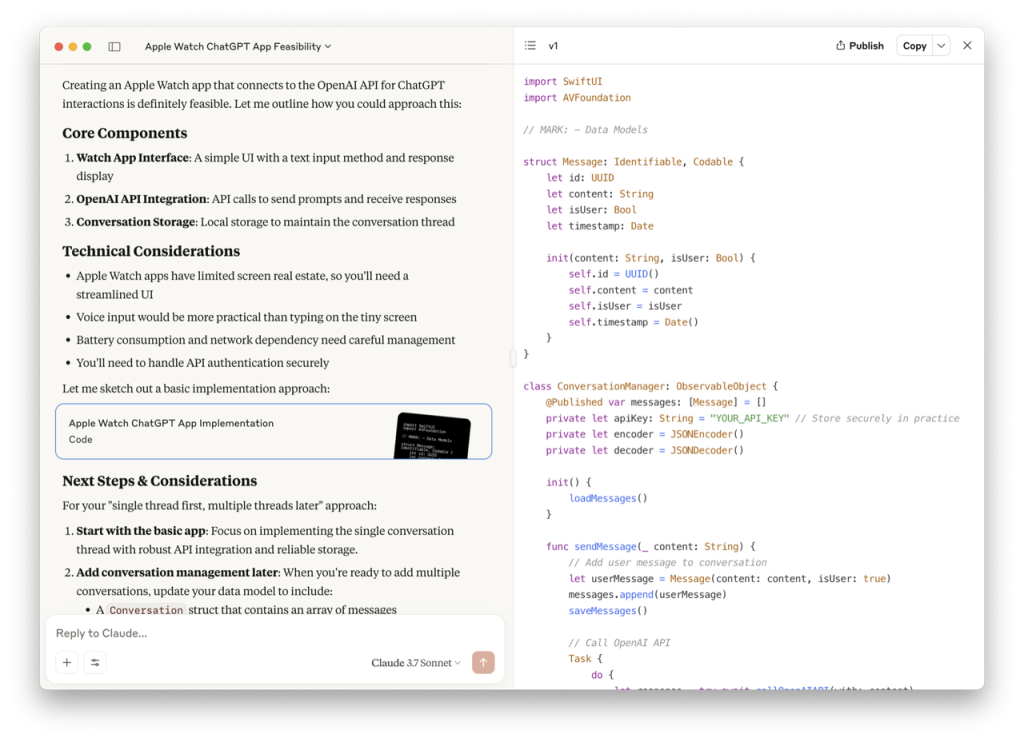

You’re going to notice what I immediately noticed. Claude didn’t just answer my question — it responded with what looked suspiciously like working code.

I would be a fool if I didn’t at least try to compile it.

If you’re a developer, you can guess what happened next. I created a new Xcode project, opened the OpenAI dashboard, generated an API key, and plugged everything in. Then I hit Build & Run.

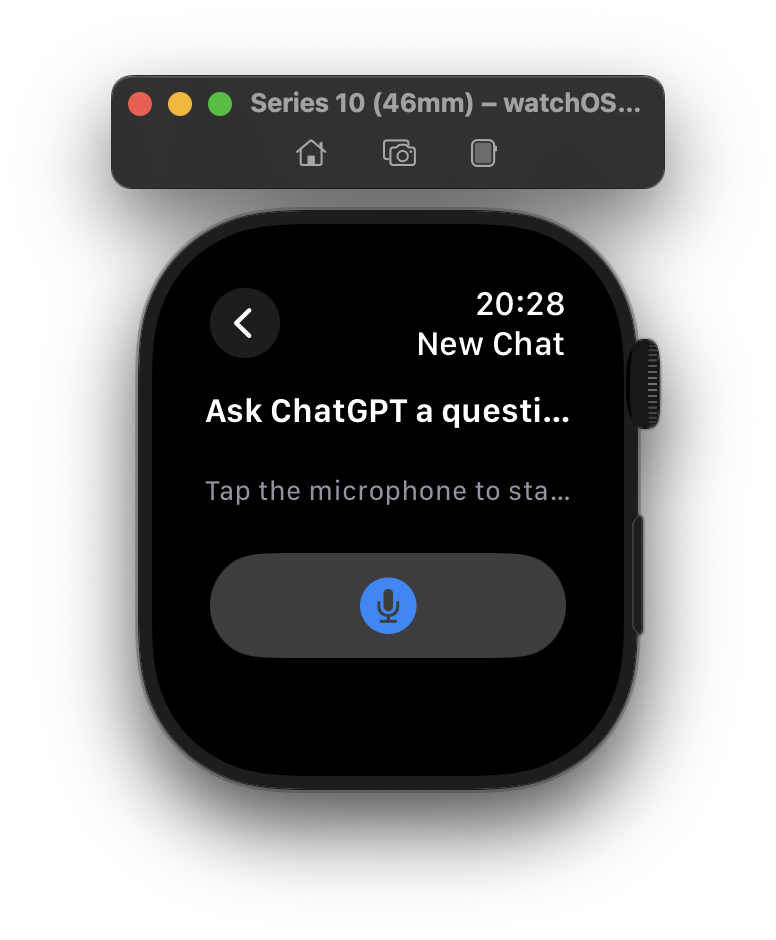

And to my complete surprise, I was staring at a working ChatGPT client running on my Apple Watch:

If you’re experiencing disbelief, you’re not alone. That was exactly my reaction too. What just happened? Did I seriously just “vibe code” an entire app?

Curiosity compiled the app

It took me a genuine minute to wrap my head around the fact that I had gone from asking a question to having a running app on my wrist. But disbelief is just an acute form of curiosity. I had to dig deeper to understand what just happened.

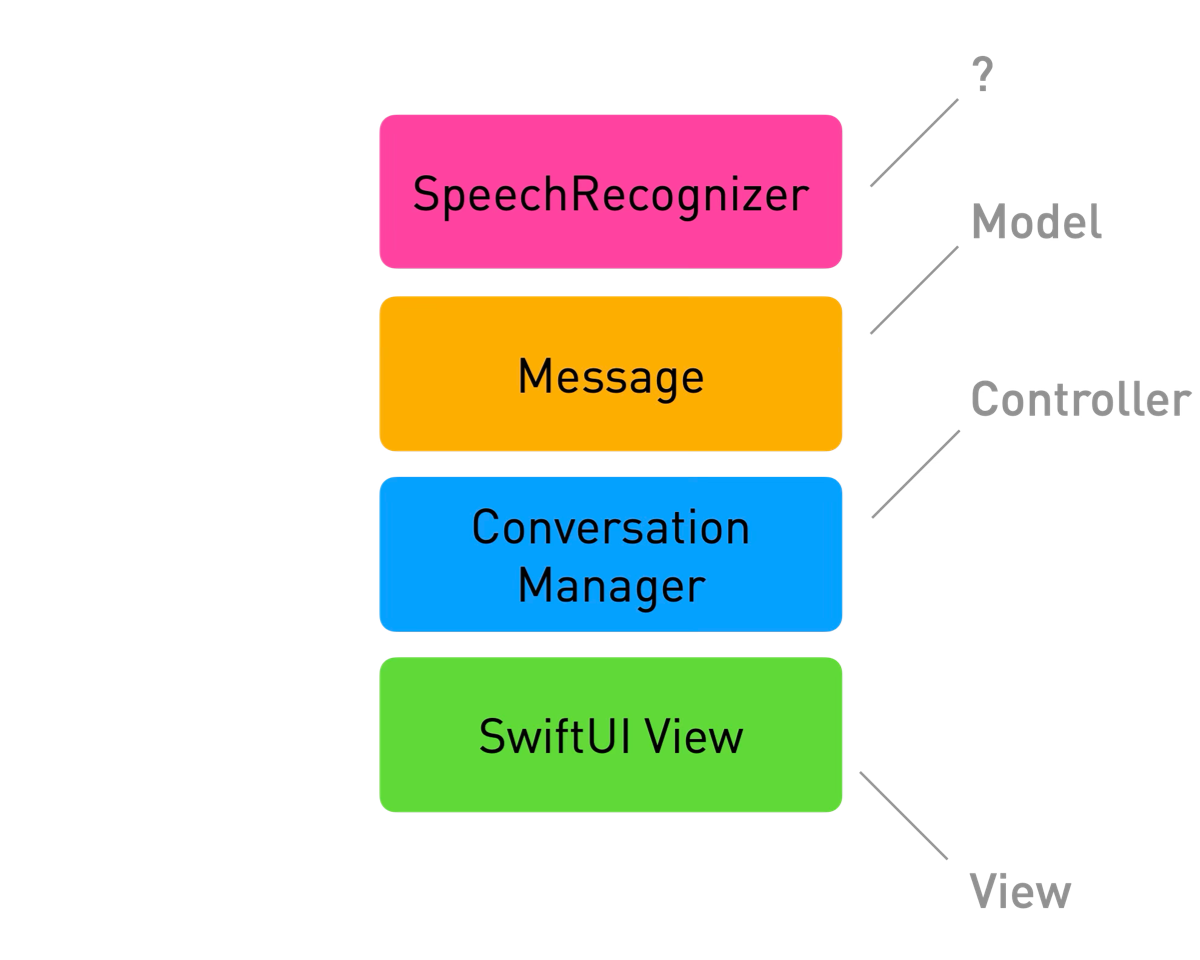

Based on the code it generated, here’s an architecture diagram of what Claude effectively built from that one prompt. It looks pretty decent, and it’s a good start — which is what made it all the more interesting.

Ngl, it’s a little scary that vibe coding can output a better-structured codebase than many of the apps I’ve seen in my career. Maybe that’s because there is so much written advice about separation of concerns: humans often choose to ignore it, but an AI trained on it simply treats it as normal?

This looks like a very decent and reasonable model (though that isUser Bool will come back to bite us later):

    struct Message: Identifiable, Codable {
        let id: UUID
        let content: String
        let isUser: Bool
        let timestamp: Date

        init(content: String, isUser: Bool) {
            self.id = UUID()
            self.content = content
            self.isUser = isUser
            self.timestamp = Date()
        }
    }

Now what about the real brains of the operation, Conversation Manager? Will it be as well structured? Spoiler: yes and no.

There was a lot going on here, which I’m not going to share in detail (feel free to vibe one yourself) — but the simplified overall class looked something like this:

    class ConversationManager: ObservableObject {
        @Published var messages: [Message] = []
        private let apiKey: String = "YOUR_API_KEY" // Store securely in practice

        init() {
            loadMessages()
        }

        func sendMessage(_ content: String) {
            // save message to User Defaults
            // Call OpenAI API in a Task
            Task {
                do {
                    // call OpenAIAPI
                } catch {
                    // dispatch an error on the main thread
                }
            }
        }

        private func callOpenAIAPI(with prompt: String) async throws -> String {
            // compose a URL request (with the hardcoded tokens!)
            // Add the latest user prompt
            // send it
            // return the response as a String, or throw an Error
        }

        private func saveMessages() {
            // persist to User Defaults
        }

        private func loadMessages() {
            // read messages from User Defaults
        }
    }

In some ways, it was better than expected. The structure was clean, Swift idioms were mostly followed, and the code was readable. But in other ways, it immediately revealed the gaps in AI-generated code, things you wouldn’t notice unless you’ve actually tried to build and ship a product.
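For readers curious about the part the skeleton leaves as comments, here is a minimal sketch of what the networking step could look like. It is not the code Claude generated. The type and function names (ChatMessage, ChatRequest, ChatResponse, makeChatRequest, parseChatReply) are made up for illustration, and it assumes a plain text reply from OpenAI’s Chat Completions endpoint with no tool calls.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

// Wire format for the Chat Completions endpoint (names are illustrative).
struct ChatMessage: Codable {
    let role: String      // "system", "user", or "assistant"
    let content: String
}

struct ChatRequest: Encodable {
    let model: String
    let messages: [ChatMessage]
}

struct ChatResponse: Decodable {
    struct Choice: Decodable {
        let message: ChatMessage
    }
    let choices: [Choice]
}

enum ChatError: Error {
    case emptyResponse
}

/// Builds the POST request. The caller supplies the key and model name.
func makeChatRequest(prompt: String, apiKey: String, model: String) throws -> URLRequest {
    var request = URLRequest(url: URL(string: "https://api.openai.com/v1/chat/completions")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    let body = ChatRequest(model: model,
                           messages: [ChatMessage(role: "user", content: prompt)])
    request.httpBody = try JSONEncoder().encode(body)
    return request
}

/// Pulls the assistant's reply text out of the raw response body.
func parseChatReply(from data: Data) throws -> String {
    let response = try JSONDecoder().decode(ChatResponse.self, from: data)
    guard let reply = response.choices.first?.message.content else {
        throw ChatError.emptyResponse
    }
    return reply
}
```

Inside an async function like callOpenAIAPI, the two halves would be joined by something like `let (data, _) = try await URLSession.shared.data(for: request)`. Splitting the request building from the parsing keeps both pieces testable without touching the network.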

What Worked (and What Didn’t)

Some parts of the code were surprisingly solid:

- The overall structure made sense

- SwiftUI layout used plausible conventions

- API calls were reasonably well handled

But some gaps were immediately obvious. For starters, what’s a Speech Recognizer?

The AI didn’t seem to know either; it just assumed we’d need one. This is the exact, unredacted code it generated:

    class SpeechRecognizer {
        private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US"))
        private var recognitionRequest: SFSpeechAudioBufferRecognitionRequest?
        private var recognitionTask: SFSpeechRecognitionTask?
        private let audioEngine = AVAudioEngine()

        func startRecording(completion: @escaping (String) -> Void) {
            // Request permissions and start recording
            // Implementation details omitted for brevity
            // In a real app, you would implement the speech recognition logic here
        }

        func stopRecording() {
            audioEngine.stop()
            recognitionRequest?.endAudio()
        }
    }

As you might, um, recognize, SFSpeechRecognizer is a real class in Apple’s Speech framework. You can use it to check whether the speech recognition service is available on a device and to initiate the speech recognition process.

What you (and the AI) might not know is that although it was introduced in iOS 10, it is not available on watchOS.

This is a small example of a key limitation: AI today is still mostly surface-level. It can mimic patterns it’s seen before, but on platforms like watchOS where the conventions are specific and the documentation ecosystem is more fragmented, it’s easy for the model to miss the simplest path.

This isn’t a knock against AI, just a reality of how siloed Apple development tends to be. It’s something I spent time addressing when I worked on gptsfordevs.com — a tool to bridge exactly this kind of gap (which is offline right now, but I’m working on bringing it back soon!)
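One way to catch a platform gap like the missing Speech framework at compile time is a canImport check. The sketch below is an illustration, not code from the app: VoiceInputStrategy and preferredVoiceInput are hypothetical names, and it assumes the Speech module is simply absent from the watchOS SDK, as described above.

```swift
#if canImport(Speech)
import Speech
#endif

/// How the app should capture spoken input on the current platform.
enum VoiceInputStrategy {
    case speechFramework   // SFSpeechRecognizer can be used
    case systemTextInput   // fall back to the system's own text input
}

/// Resolved at compile time: the Speech branch only exists where the
/// framework is part of the SDK being built against.
func preferredVoiceInput() -> VoiceInputStrategy {
    #if canImport(Speech)
    return .speechFramework
    #else
    return .systemTextInput
    #endif
}
```

With a guard like this, shared code that mentions SFSpeechRecognizer still compiles for a watch target, and the watch app can lean on the system text input instead.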

Engineering an MVP

With a working app on my wrist, the next step was making it slightly less fragile.

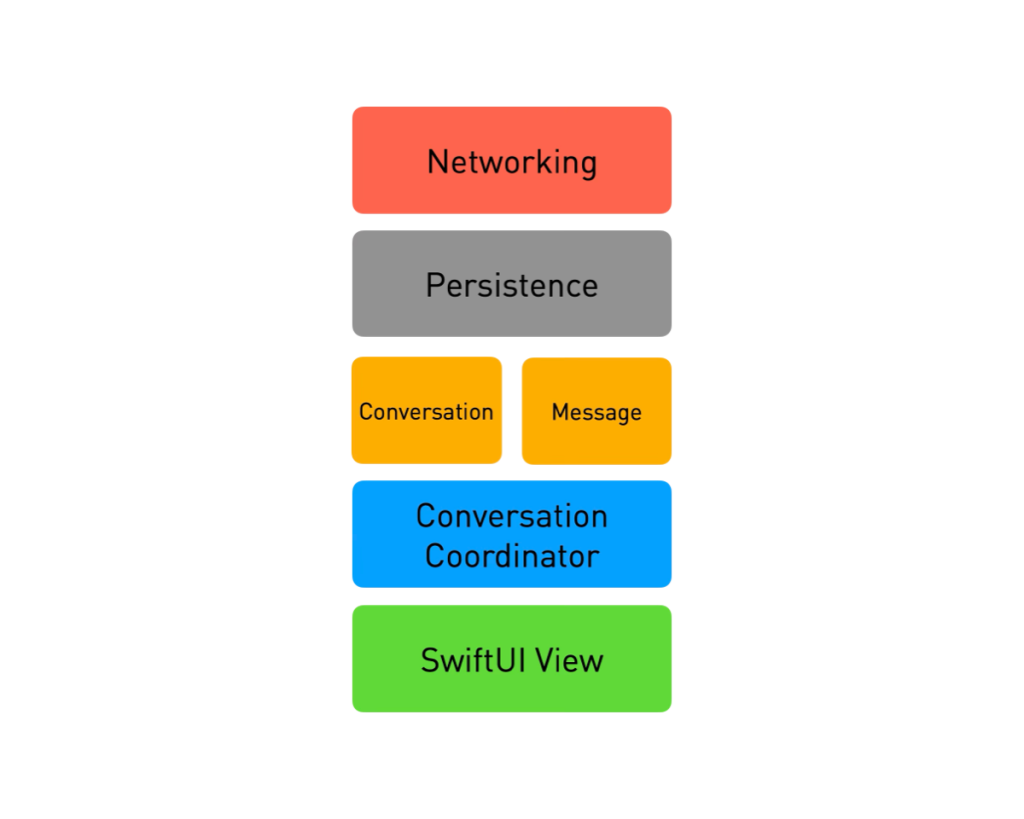

I decided to add persistence with SwiftData, introducing two simple model types: Conversation and Message. With this, I could finally restore conversation history across launches. The architecture now had some bones: a proper persistence layer, a simple conversation coordinator to manage flow, and a basic networking layer to call the OpenAI API.
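To give a feel for how small that persistence layer can be, here is a rough sketch of two SwiftData models. Only the type names, the Message fields shown earlier, and lastUpdated come from this post. The title property, the relationship setup, and the initializers are assumptions for illustration.

```swift
import Foundation
import SwiftData

@Model
final class Conversation {
    var title: String            // assumed field, for illustration
    var lastUpdated: Date

    // Deleting a conversation also deletes its messages.
    @Relationship(deleteRule: .cascade, inverse: \Message.conversation)
    var messages: [Message] = []

    init(title: String, lastUpdated: Date = .now) {
        self.title = title
        self.lastUpdated = lastUpdated
    }
}

@Model
final class Message {
    var content: String
    var isUser: Bool
    var timestamp: Date
    var conversation: Conversation?   // back-reference to the owning conversation

    init(content: String, isUser: Bool, timestamp: Date = .now) {
        self.content = content
        self.isUser = isUser
        self.timestamp = timestamp
    }
}
```

Attaching `.modelContainer(for: [Conversation.self, Message.self])` to the app’s main scene is typically enough for SwiftData to create the store and restore its contents on the next launch.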

Separation of concerns FTW!

The API key was still hardcoded at this point, which wasn’t ideal, but I let that slide for now, to keep moving the little guy forward.

The initial UI was functional, but terrible. The mic button consumed half the screen, and responses were truncated and barely readable. It proved the concept, but it was far from usable and nothing like a native watchOS interface.

So I went back to school on designing for Apple Watch. I thumbed through the section on Designing for watchOS in the Human Interface Guidelines to refresh my memory, and fired up the Developer app cued to the relevant sessions from WWDC that covered the recent redesign of watchOS 10.

From there, it was clear I needed to make a few changes:

- Adjust the layout to make better use of screen space

- Fix the navigation to adhere to platform conventions

- Replace the oversized mic button with a compact, tappable SwiftUI TextFieldLink

The difference was stark: the app went from “proof of concept” to “I could actually use this.” Cut to me actually using this:

These were small tweaks, but ones the AI didn’t and probably couldn’t make on its own. They required platform familiarity, design sensibility, and context around how I wanted the app to work. In other words: product decisions.
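If you haven’t met TextFieldLink before, it is a watchOS-only SwiftUI control that looks like a button and opens the system text input when tapped. The sketch below shows the general shape of the replacement. AskButton, its label, and its prompt text are placeholders rather than the app’s actual view, and the block only compiles for a watchOS target.

```swift
import SwiftUI

struct AskButton: View {
    // Called with whatever the user dictated, scribbled, or typed.
    let onSubmit: (String) -> Void

    var body: some View {
        TextFieldLink(prompt: Text("Ask anything")) {
            // Compact label in place of the half-screen mic button.
            Label("Ask", systemImage: "mic.fill")
        } onSubmit: { text in
            onSubmit(text)
        }
    }
}
```

Because the system handles the input UI, there is no recording or recognition code to maintain. The view just receives a finished string.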

Performance Considerations: Details Matter

As I started improving the UI, I discovered several performance issues. For one thing, I noticed the cells were sorting the entire conversation list on every refresh. Not ideal for a tiny device with limited processing power.

    Section(header: Text("Recent Conversations")) {
        ForEach(store.conversations.sorted(by: { $0.lastUpdated > $1.lastUpdated })) { conversation in

Don’t do this.

Seemingly small optimizations like this make a huge difference to the user experience on a constrained device like Apple Watch.
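One possible fix is to sort when the data changes rather than every time the view body runs. The sketch below is a simplified stand-in, not the app’s real store: ConversationSummary and the upsert method are hypothetical, and only the lastUpdated ordering comes from the snippet above.

```swift
import Foundation

struct ConversationSummary: Identifiable {
    let id: UUID
    var title: String
    var lastUpdated: Date
}

/// Keeps `conversations` ordered newest-first so views can iterate it directly.
struct ConversationStore {
    private(set) var conversations: [ConversationSummary] = []

    /// Inserts or updates a conversation, then restores the ordering once.
    mutating func upsert(_ conversation: ConversationSummary) {
        if let index = conversations.firstIndex(where: { $0.id == conversation.id }) {
            conversations[index] = conversation
        } else {
            conversations.append(conversation)
        }
        conversations.sort { $0.lastUpdated > $1.lastUpdated }
    }
}
```

The list can then use `ForEach(store.conversations)` with no sorting in the view. If the list is backed by SwiftData, declaring the fetch as `@Query(sort: \Conversation.lastUpdated, order: .reverse)` is another option that moves the ordering into the query itself.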

Innovation starts with a pain point.

Getting to a prototype like this would normally take a team at least a week of focused work. With AI, I had something running on my wrist in a single evening.

I went from wondering if something was even possible to shaping a real product — with a working version in front of me to try, test, and iterate on.

That’s what makes AI so powerful in early product discovery. With new technology, especially something this unfamiliar, you can’t always think your way into an insight. Sometimes, you need to build it, try it, and see how it feels. Having something real gives you a way to learn what might actually be useful.

Once I experienced that, I started thinking about how it could help others too. That shift in focus is the real value.

We cannot predict the future, but we can invent it.

Dennis Gabor

AI speeds up the feedback loop. It helps you test ideas quickly, learn from missteps, and glimpse new opportunities. But turning those insights into something people want still takes product judgment, thoughtful design, and iteration.

In a matter of hours, I had something that technically worked, looked halfway decent, and restored state across launches. The temptation was to keep hacking away. But it was time to zoom out and ask the bigger questions:

- What is this for?

- Who could benefit from using it?

- How will they use it in the real world?

I started testing the app in my own day-to-day routines. It quickly became clear there were moments (walking the dog, stepping into the kitchen, between meetings) where pulling out my phone felt like too much, but raising my wrist was easy. I started to think of more and more places and situations where this could be useful.

This was no longer a weekend hack. It was the start of a product.

If that sounds interesting, sign up below to get Part 2 when it drops. And tell me what you’d like to hear more about!

But first…

Try it yourself

I’d love for you to try out the app for yourself, and share your thoughts on:

- How and when you use it

- What features you’d like to see

- How it could better fit into your workflow

It’s been a hectic few weeks, with a lot of work to turn this into a real product that you can use every day.

I’m proud to say that WristGPT is now available on the App Store!

Download WristGPT now on the App Store →

In Part 2, I’ll dig into what happened next: adding one-tap access with Complications, securing the API key with AIProxy, syncing data with CloudKit and App Groups, and designing for handoff to iPhone. All of this is just the foundation!